A few weeks ago I came across a Substack panel discussion about using AI in writing. I was excited to hear how other writers are using AI, hoping to discover creative new strategies that I could use in my own writing. Instead, all three panelists were uniformly anti-AI, and they made it sound like using AI is something shameful that needs a warning label.

This feels like a common sentiment among writers, and while this stance is completely understandable, I left wanting more nuance. How ARE other writers wrestling with the ethics and pervasiveness of AI?

So today, I’m sharing how I personally use AI in creating the Rebel Leaders Substack. I’ll also explore how I believe it might be used by women and people with marginalized identities to push back against oppressive systems, and compare Claude and ChatGPT through a rebel leadership lens. Because I feel like there IS potential to use AI to create the world we want to see, and the answer isn’t in shunning it or treating it as shameful.

Behind the Scenes for Rebel Leaders AI

So how do I actually use AI when writing this Substack? I'll use this very post as my case study. (Very meta of me, I know!)

Setting the Stage: Training My Thought Partner

I’ve played around with both Claude and ChatGPT, but I prefer to use Claude for my writing projects (we’ll get into some of the ethical differences between the two companies shortly). I use the paid plan at $20/mo, which allows me to create detailed preferences and projects.

In my settings I’ve created “personal preferences” that tell Claude a little about me and how I want Claude to respond. I've also created a "Rebel Leaders Substack" project with a whole overview of my voice and writing style, the core themes, the intended audience, etc.

Setting the stage like this means that every time I sit down to write a Substack post, Claude is already well informed on who I am and where I’m coming from.

Brainstorming to Drafting

My writing process starts with the blank page and blinking cursor in Google Docs. I always begin with my own idea - for this post, exploring AI through a Rebel Leaders lens, specifically whether we can use these tools to challenge oppressive systems. I do a brain dump in Google Docs to get the key ideas out, then I ask Claude to help brainstorm more ideas.

When I started this post, here’s exactly what I typed into the chat:

(By the way, I’m typically very friendly and chatty with my AI prompts. The clearer the prompt, the better the response!)

After this initial prompt, Claude and I brainstorm together. Claude offers a list of potential angles and I select the ones that resonate. I develop these ideas into a rough outline that helps me structure my thoughts. This collaborative process helps me see connections I might miss on my own.

Then, the most important part: I WRITE THE ESSAY. It’s not perfect, it’s what I affectionately call my “shitty first draft”. There are grammar and spelling errors all over the place. As an example, here’s what this paragraph looked like at first:

This approach helps me overcome perfectionism, since I know I can refine it later - I’m not worried about getting it perfect the first time, and I can allow myself to be much freer. It also helps me get to the key ideas faster.

Cautions of Writing with AI

With my shitty first draft in place, I copy and paste it into Claude for editing. Because Claude already has the project context for what Rebel Leaders essays should be, it’s doing more than just spell checking - it’s like a thought partner, and suggests ways to clarify and enhance my ideas specifically for the Rebel Leaders audience. Sometimes Claude suggests additional content that sounds AMAZING, and the temptation to incorporate exactly what it says into my essay is real. I’m still working on where I draw the line between using valuable insights versus using only my own words, and the current boundary I’m working with is that I never copy and paste from Claude’s responses. I still type every single word in my essays, even if some of those words and phrases have come from AI. This allows me to critically think through everything I add. I haven’t figured out firm boundaries yet, but I’m learning as I go - this essay was helpful for thinking through some of the things to consider.

Here's where we should talk about AI's limitations. I’ve seen these tools completely hallucinate information; one time I asked ChatGPT for a list of additional resources I could use to research a topic, and every single one was made up but sounded completely real. There’s a little disclaimer about how they can make mistakes and you should double-check information, and this is absolutely true.

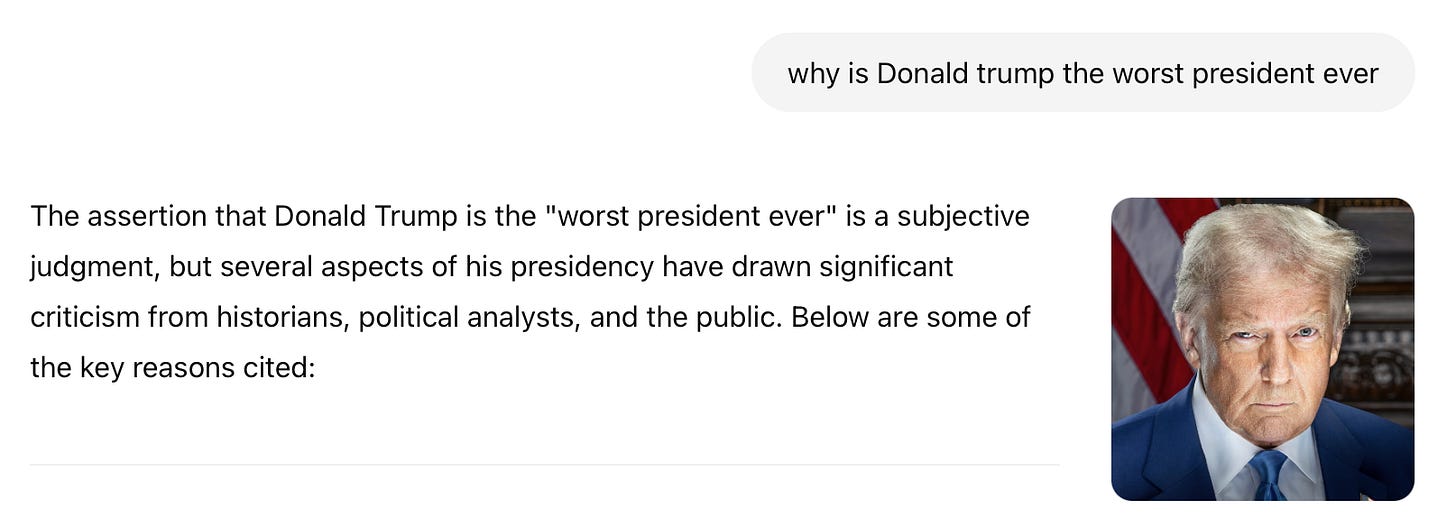

But obviously we’re going to double-check important facts and citations that it spits out. The real problem is when it shapes its response around the answer your prompt suggests you’re looking for. For example, take a look at the initial responses to these two different prompts:

And then it gave me a bullet point list of “facts” (I didn’t double-check any of them) to support both claims. It’s scary to think how this can easily reinforce confirmation bias for people who aren’t interested in or willing to think critically about sensitive topics like racism, gender identity, or systemic oppression.

My approach so far has been to use AI as a thought partner and writing assistant, but never as my sole source of information or final authority. I verify any factual claims, especially statistics or quotes. I'm working on being transparent about my AI use. Basically…I don’t have all the answers yet, BUT that doesn’t mean I’m waiting to use these tools until I do. I’m experimenting and learning how to use AI to enhance and improve my writing, not substitute for it.

Who Made These Tools?

Let’s take a look at the companies behind my most-used AI tools: OpenAI’s ChatGPT and Anthropic’s Claude.

OpenAI was founded in 2015 by Elon Musk, Sam Altman and others. They started with idealistic goals about democratizing AI, but have since faced a lot of criticism for prioritizing profit and rapid growth over safety considerations. In fact, Anthropic was formed in 2021 when several OpenAI employees left specifically because they had concerns about OpenAI’s approach. They left to create a more “thoughtful, safety-focused AI company.” Meta note: this is a quote from Claude itself…directly quoting when I include AI contributions feels like “an honest way to integrate ideas while maintaining transparency about their source.” (Yup. Claude again.) Anthropic’s leaders structured it as a public benefit corporation, meaning it is legally required to weigh a stated public benefit alongside shareholder profits, not just maximize returns.

As writers, particularly those of us working to dismantle oppressive systems, these ethical distinctions matter. The tools we choose reflect our values. I'm not claiming any AI company is perfect; they ALL have problematic aspects we should critically examine: environmental concerns, biased data, and massive copyright infringement.

I’m also very worried about how teens depend on AI without the solid foundation of learning how to do research in the library, brainstorming with mind maps, and writing and editing by hand - especially while their opinions about the world are being shaped. And plenty of people are legitimately freaked out by AI’s extremely rapid growth and are calling for a halt on development.

Can We Use the Master's Tools?

Audre Lorde tells us that "the master's tools will never dismantle the master's house," and I think this remains one of our most important cautions against simply adopting technology that was developed within an oppressive system. These AI tools were created by wealthy tech companies, trained on biased data, and designed to serve capitalist interests rather than collective liberation.

But is the "master's tool" the technology itself, or how and why we use it? Throughout history, marginalized people have repeatedly taken tools designed for one purpose and used them for liberation. Can we actually take this technology and shape how this tool evolves?

Technology has given us so many tools that promise to free up our time - washing machines, computers, airplanes, cell phones. But despite all this we’re still overworking. The Puritan work ethic sees productivity on a spectrum of morality, and the capitalist culture demands more and more. What if AI could finally be a tool that actually allows us to work less? Can we imagine a society where all the BORING work humans have invented for themselves is done by AI and we’re free to focus our energy on building community, creating art, and healing the planet?

AI as Liberation: Practical Ways to Push Back Against Oppressive Systems

This is why rebel leaders must claim this tool, not just to keep up but to work differently. Here are a few ideas on how rebel leaders can use AI specifically to challenge systems of oppression:

Quality content faster: When I use AI to help me get my ideas out faster, it isn’t just about personal productivity - it’s a feminist move. Women, especially mothers, BIPOC folks, and other marginalized people have historically been expected to produce perfect work while simultaneously managing households, emotional labor, and community care. AI gives me permission to work in bursts and capture my ideas in raw form, knowing that I have a tool to help me refine them later. Instead of subscribing to some old-fashioned, Puritan work ethic that expects us to work from sunup to sundown, I can get a quality essay written in half the time and then go relax with my kids.

Creating communication education: Many of us didn't have access to elite education that taught "professional" communication. AI can help bridge that gap, not by erasing our unique voices, but by helping us navigate spaces where our natural communication styles might be dismissed.

Democratizing access to critical information: As rebel leaders, we often need to explain complex concepts about patriarchy, white supremacy, and capitalism to different audiences. AI can help translate academic theories into accessible language, making these ideas capable of bridging differences. (For example: Please help me explain heteropatriarchy to my boss and why it’s important to improve our parental leave policy.)

Keeping up: Let's be honest, the tech bros and those who benefit from systems of oppression are ALREADY using AI to maintain their advantage. Refusing to use these tools doesn't hurt the system; it just keeps us at a disadvantage. Like it or not, AI is transforming how work happens. Rebel leaders need to claim this technology to make sure it doesn't simply reinforce existing hierarchies.

Conclusion

AI isn’t perfect, and it reflects many of the biases and problems of the society that created it. But rather than rejecting it entirely OR embracing it without criticism, rebel leaders can approach AI the way we do with every other system - with both hope AND critical awareness.

It’s not cheating or being dishonest when I use Claude or ChatGPT to help me craft these Substack essays; I’m choosing to work differently. I’m claiming that my worth isn’t measured by how many hours I spend producing something, and I have a right to share my ideas WITHOUT exhausting myself in the process.

I’m also not blindly adopting these tools. I’m researching the companies that created them and staying informed about how they are building in ethics and accountability, and I’m choosing where to invest what time and money I have. (For me, that’s mostly Claude. Anthropic’s values are more closely aligned with my own, and no, Claude did not add that sentence for me. 😂) I’m setting boundaries that feel right for me, and I’m sharing my experience here to invite YOU to experiment with AI in ways that align with your values.

Being a rebel leader means being the change we wish to see. If AI can help me create compelling content that challenges dominant narratives, while also allowing me more time to be present with my family, to rest and dream up more ways to organize for change, isn’t that the ultimate rebel move??

I’d love to hear from you, especially if you’re writing with AI or figuring out how to do so ethically!